We cannot use an analytical storage system for transactional requirements and vice versa. But have you ever wondered why is that so?

Transactional vs Analytical storage system

Transactional storage (ex - MySQL, Postgres, etc.) is used to work with day-to-day data having frequent updates and inserts into the DB.

for example, a flight booking system will be using transactional storage systems for their requirements as there are many frequent requests for updates/inserts/deletes such as flight cancellation, flight change, flight booking, etc.

On the other hand, Analytical storage systems are used to work with historical data and to get some analysis out of a large set of data.

These systems mostly work with read queries only and frequent updates are not required.

for example, considering the same flight booking system, suppose sales were less for Dec-2022 month and a requirement came for finding some analysis on why sales were less based on the previous five years of data. here we are not doing any update operations but we just need to run some queries on huge sets of data.

Now, the question comes why there are separate systems for transactional and analytical requirements.

It's because transactional systems are generally monolithic systems that cannot handle big data storage and query, it's very fast and suitable for handling quick writes/updates/read queries on individual rows or small datasets.

20-30 years back from now, transactional systems were capable enough for both requirements because of the small size of the data but now to handle big data queries a distributed system is required for structured data which is read-optimized and can run queries parallelly on different parts of the data to get results faster.

1 such system/tool is Hive which basically is an open-source data warehouse.

What is Hive?

It is an open-source data warehouse that provides an SQL-like interface for querying large sets of data stored in a structured way in files for big data analytics.

Hive works on top of Hadoop and each query runs as a MapReduce job internally on files stored in HDFS.

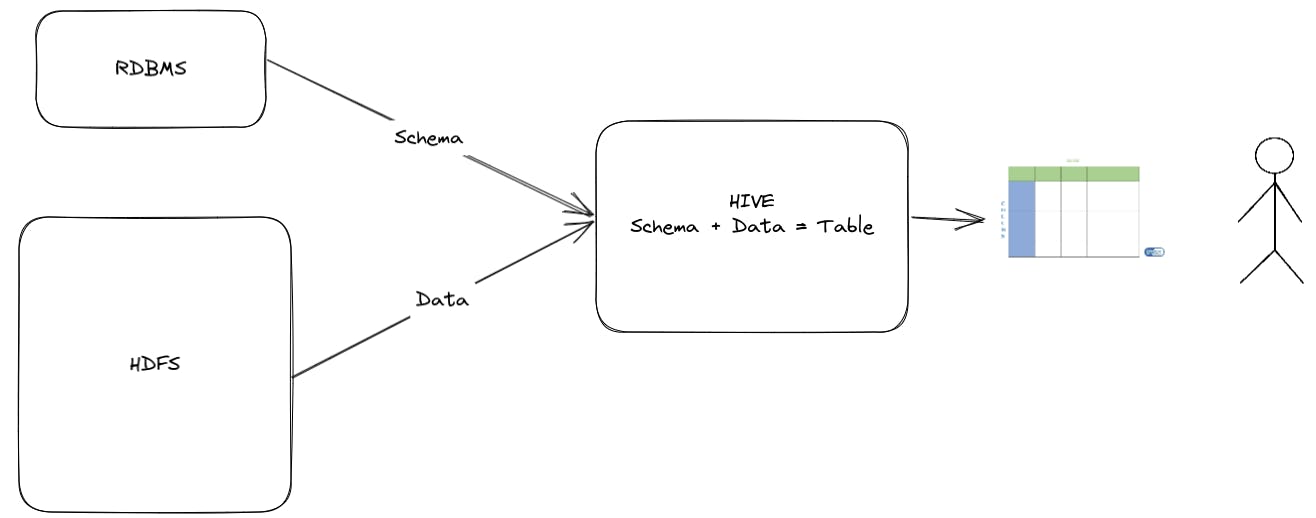

A hive table is having mainly two parts

1. Metadata( schema of the table)

which is stored on an RDMS such as MySQL. it is stored on RDBMS and not in HDFS because HDFS does not support updates and also RDBMS is much faster for small data like table schema.

2. Actual Data

actual data is stored on HDFS and each line and field is terminated by some delimiter (need to mention while creating a table for a file). by default fields are terminated by ',' and lines are terminated by '\n'.

When we run any query to see the data of a hive table a MapReduce job is triggered and data is fetched from HDFS data nodes, then the schema is fetched from RDBMS and imposed on the data to be presented in a tabular format.

Optimizations in Hive

Hive mainly provides 3 types of optimizations

1. Structure-level optimizations

The idea here is to design tables in such a way that queries are faster while executing.

Partitioning

we can partition the data based on 1 or more columns. it is logical nothing logical grouping of data. when we create a partitioned table in Hive a subfolder is created for each partition column value.

So whenever a query is executed with where clause as 1 of the partitioned column value, the search space is reduced and execution becomes faster.

Bucketing

It is basically the division of the data based on a value of a column. It is not a local grouping of data and each bucket have similar sizes of data in each, unlike partitioning where different partition can have different sizes.

In Hive on bucketing a separate file is created for each bucket and while data ingestion the destination bucket depends on a hash function and a particular column value on which table is bucketed.

2. Query Level optimizations

most expensive queries in Hive are join queries where multiple tables are joined together to give the result. The number of unique columns on which join is performed is equal to the number of MapReduce jobs that will be triggered.

We can optimize a join query -

2.1 by using less number of unique columns

2.2 using bucketed and partitioned columns as join columns

2.3 By using map-side joins

In map-side joins, only mappers work and reducers are not required. it can only be achieved if only 1 big table is present and all other tables are small tables (that can fit in memory as a map) so that the whole small table goes in memory to different data nodes where parts of the big table are present.

Using Map Side join only inner-join and Big table outer joins are possible, small table outer joins are not possible.

2.4 Bucket map joins

2.5 Sort Merge Bucket Map Join (SMB join)

3. Syntactical optimizations for better management

By using window functions for complex queries, virtual tables, and complex data types such as array, map, and struct provided by Hive.